Comparison: Data pipeline and ETL pipeline.

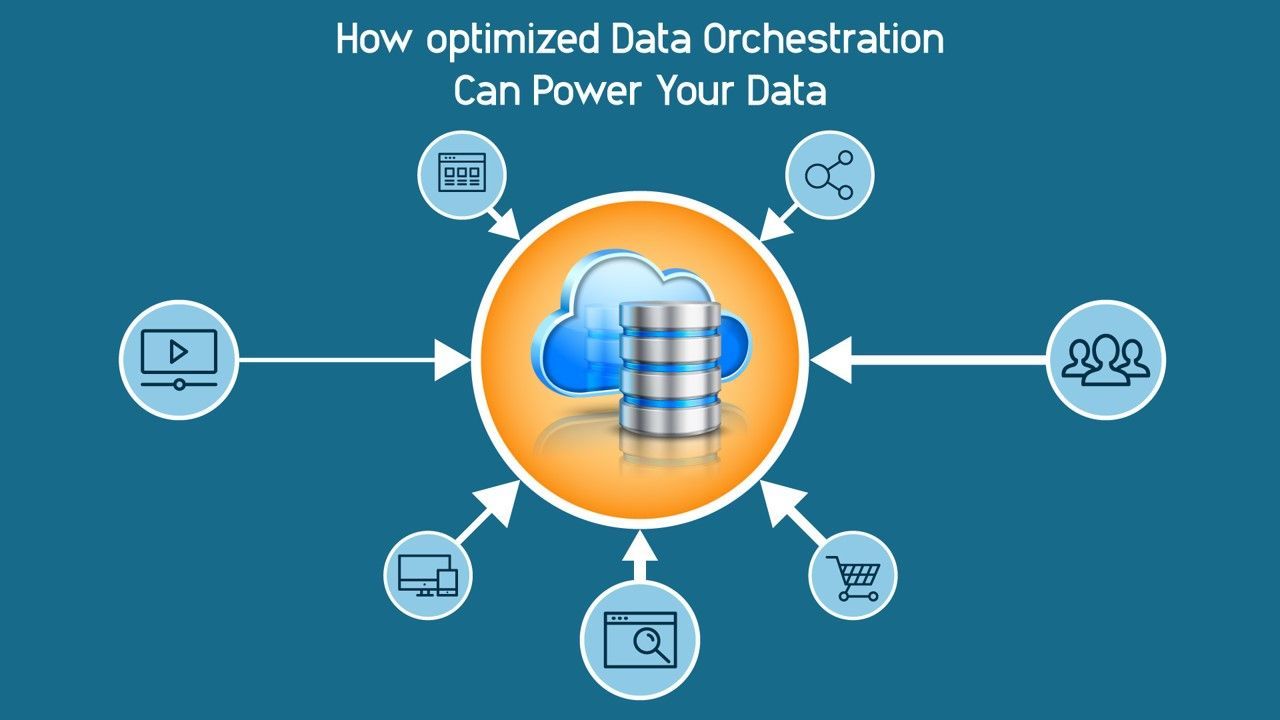

Data Orchestration

Data orchestration is a crucial component of the data journey for any business. This journey involves a set of workflows, including data collection from various sources, transforming data for use in analysis and products, creating reports, and loading the data into a centralized warehouse. Data orchestration automates these workflows and organizes data to enhance the process of business decision-making. When all the workflows of a data pipeline are orchestrated, it is known as data pipeline orchestration.
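The core idea of running workflows in dependency order can be sketched with Python's standard library alone. This is a minimal illustration, not how any particular orchestration tool is implemented: the task names (`collect`, `transform`, `load`, `report`) and the in-memory `state` dictionary are hypothetical stand-ins for real workflow steps, and `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter

def run_pipeline(tasks, dependencies):
    """Run every task once, only after all tasks it depends on have run."""
    order = list(TopologicalSorter(dependencies).static_order())
    executed = []
    for name in order:
        tasks[name]()          # call the task function
        executed.append(name)  # record the order for monitoring
    return executed

# Hypothetical workflow steps that share a small in-memory state.
state = {}

def collect():
    state["raw"] = [" 3", "1 ", "2"]

def transform():
    state["clean"] = sorted(int(x.strip()) for x in state["raw"])

def load():
    state["warehouse"] = list(state["clean"])

def report():
    state["report"] = f"{len(state['warehouse'])} rows loaded"

tasks = {"collect": collect, "transform": transform, "load": load, "report": report}

# Each key maps to the set of tasks that must finish before it can start.
dependencies = {
    "collect": set(),
    "transform": {"collect"},
    "load": {"transform"},
    "report": {"load"},
}

executed = run_pipeline(tasks, dependencies)
```

Real orchestrators add scheduling, retries, and alerting on top of this ordering step, but the dependency graph is the part that turns separate scripts into one workflow.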

Phases of Data Orchestration

1. Preparation

This step ensures the integrity and accuracy of the data. Existing data is enriched with third-party data and labeled with the appropriate designations.

2. Transformation

This step converts data into a standard format. For example, dates can be written in different formats, such as 20.02.2016 or 20022016. The transformation phase can convert these into one standard format.
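As a rough sketch of this kind of conversion, the function below tries each known input format in turn and emits an ISO 8601 date. The choice of ISO 8601 as the standard format, the `KNOWN_FORMATS` tuple, and the `normalize_date` name are assumptions made for illustration.

```python
from datetime import datetime

# Input formats this sketch knows about: "20.02.2016" and "20022016".
KNOWN_FORMATS = ("%d.%m.%Y", "%d%m%Y")

def normalize_date(text):
    """Return the date as an ISO 8601 string (YYYY-MM-DD)."""
    cleaned = text.strip()
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(cleaned, fmt).date().isoformat()
        except ValueError:
            continue  # not this format, try the next one
    raise ValueError(f"unrecognized date format: {text!r}")

dotted = normalize_date("20.02.2016")
compact = normalize_date("20022016")
```

Both calls produce the same string, `2016-02-20`. Raising on unknown formats, rather than guessing, keeps ambiguous dates from slipping through silently.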

3. Cleaning

This phase identifies corrupted, missing (NaN), duplicate, and outlier values, and then corrects or removes them.
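The sketch below shows one simple cleaning policy: drop anything that cannot be repaired. The record layout (`id`, `amount`), the `clean` function, and the outlier rule (distance from the median measured in median absolute deviations) are all assumptions for illustration; a real pipeline might impute or flag values instead of removing them.

```python
import math
from statistics import median

def clean(records, max_deviations=5.0):
    """Drop corrupted, NaN, duplicate, and outlier records."""
    seen_ids = set()
    valid = []
    for rec in records:
        try:
            amount = float(rec["amount"])
        except (TypeError, ValueError):
            continue                      # corrupted: not a number
        if not math.isfinite(amount):
            continue                      # NaN or infinity
        if rec["id"] in seen_ids:
            continue                      # duplicate id, keep the first
        seen_ids.add(rec["id"])
        valid.append({"id": rec["id"], "amount": amount})

    if not valid:
        return []
    # Outliers: far from the median, measured in median absolute deviations.
    med = median([r["amount"] for r in valid])
    mad = median([abs(r["amount"] - med) for r in valid])
    if mad == 0:
        return valid                      # no spread, nothing to flag
    return [r for r in valid if abs(r["amount"] - med) / mad <= max_deviations]

records = [
    {"id": 1, "amount": "10.0"},
    {"id": 2, "amount": "11.5"},
    {"id": 2, "amount": "11.5"},   # duplicate
    {"id": 3, "amount": "nan"},    # missing value
    {"id": 4, "amount": "oops"},   # corrupted
    {"id": 5, "amount": "9.5"},
    {"id": 6, "amount": "10.5"},
    {"id": 7, "amount": "5000"},   # outlier
]
cleaned = clean(records)
kept_ids = [r["id"] for r in cleaned]
```

Here only records 1, 2, 5, and 6 survive.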

4. Synchronizing

This phase continuously updates the data as it moves from a source to a destination, so that the two stay consistent during orchestration.
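One common way to keep a destination in step with a source is an incremental sync driven by a "last updated" watermark. The sketch below assumes each row carries an `id` and an `updated_at` value; those field names and the `sync` function are hypothetical, and integer timestamps are used only to keep the example short.

```python
def sync(source, destination, last_synced_at):
    """Copy rows changed since last_synced_at and return the new watermark."""
    watermark = last_synced_at
    for row in source:
        if row["updated_at"] > last_synced_at:
            destination[row["id"]] = dict(row)   # insert or overwrite (upsert)
            watermark = max(watermark, row["updated_at"])
    return watermark

source = [
    {"id": 1, "name": "a", "updated_at": 1},
    {"id": 2, "name": "b", "updated_at": 2},
]
destination = {}

# First run copies everything newer than the initial watermark (0).
watermark = sync(source, destination, 0)

# A row changes at the source; the next run copies only that row.
source[0] = {"id": 1, "name": "A", "updated_at": 3}
watermark = sync(source, destination, watermark)
```

Running `sync` repeatedly on a schedule, or whenever the source signals a change, keeps the destination consistent without recopying unchanged rows.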

Benefits of Data Orchestration

Data orchestration offers several benefits that organizations can leverage to improve their data management processes.

1. Scalability

Data orchestration allows organizations to scale their data sets by breaking down silos and acquiring data from various sources. This can help reduce costs and, in turn, improve profitability.

2. Visibility and Data Quality

The orchestration process streamlines each step while catching errors, which makes it possible to monitor the data and maintain its quality.

3. Data Governance

Data flow is structured in data orchestration, and data from multiple sources is well-monitored according to data governance standards.

4. Real-time Data Analysis

Data collected in data orchestration is directly available for use in data analytics tools. As the pipeline is automated, data is accessible as required, with fewer of the errors that manual handling introduces.

Data Orchestration Tools

1. Apache Airflow

Airflow pipelines are defined using Python code, allowing for dynamic pipeline generation. It enables building workflows that connect with Google Cloud Platform, AWS, Microsoft Azure, and more. Airflow helps automate, schedule, and monitor workflows.

2. Shipyard

Shipyard connects your data tools, moves data between them, and alerts you if there are issues. It's a fully-hosted solution designed for data professionals of all technical backgrounds with a focus on low-code templates powered by open-source Python.

3. Metaflow

Metaflow is also a Python framework. It aims to increase the productivity of data teams that build and manage real-life data science projects, and it is commonly used in developing and deploying machine learning and AI applications.

4. Stitch

Stitch lets you specify when data replication should happen. It monitors replication progress and helps identify and resolve errors that arise in data pipelines.

ETL Pipeline

A data pipeline that follows the ETL (extract, transform, load) process to move data is known as an ETL pipeline. Data is collected from various sources and transformed into a standard format before being stored in a destination such as a data warehouse, relational database, or cloud-hosted database. ETL pipelines have several use cases:

- Creating a centralized data warehouse to store all data distributed across multiple sources in an organization.

- Transforming and transferring data from one source to another.

- Enhancing the CRM system by adding third-party data.

- Providing access to structured and transformed data for pre-defined analytics use cases, creating stable datasets for data analytics tools.

An ETL pipeline ensures data accuracy and stores the data in a centralized location, enabling access for all users. Data analysts, data scientists, business analysts, and other professionals can then use the stored data for their own work, focusing on business questions instead of building data tooling.
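The three ETL stages can be sketched end to end with the standard library. This is a toy illustration under stated assumptions: the inline CSV text stands in for a real source, an in-memory SQLite database stands in for the warehouse, and the `customers` table and its column names are invented for the example.

```python
import csv
import io
import sqlite3

# Hypothetical source data with inconsistent names and non-ISO dates.
RAW_CSV = """customer,signup_date,spend
 alice ,20.02.2016,10.50
BOB,21.02.2016,7
"""

def extract(text):
    """Extract: read rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize names, dates (to YYYY-MM-DD), and numeric types."""
    result = []
    for row in rows:
        day, month, year = row["signup_date"].split(".")
        result.append((
            row["customer"].strip().lower(),
            f"{year}-{month}-{day}",
            float(row["spend"]),
        ))
    return result

def load(rows, conn):
    """Load: write the transformed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(name TEXT, signup_date TEXT, spend REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")   # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT name, signup_date, spend FROM customers ORDER BY name"
).fetchall()
```

Once loaded, analysts query the `customers` table directly and never touch the raw CSV, which is the separation the paragraph above describes.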

Comparison: Data Pipeline and ETL Pipeline

Even though an ETL pipeline is a type of data pipeline, the two pipelines have different characteristics, and ETL alone cannot support real-time data applications. The following are the significant characteristics that differentiate a general data pipeline from an ETL pipeline.

- An ETL pipeline always loads data into another database or a data warehouse, while a data pipeline does not have to: it can also deliver data directly to other targets, such as analytics tools.

- Data pipelines can either transform data or not transform it at all, but ETL pipelines always transform data before loading it into the target destination.

- ETL pipelines typically load data in batches on a regular schedule, whereas data pipelines can also support real-time processing with streaming computation, allowing data to update continuously.

- ETL pipelines end the process after loading the data, whereas data pipelines can keep running, continuing to update and process data as it arrives.
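The batch versus streaming distinction in the list above can be illustrated with a small sketch. The function names (`run_batch`, `run_stream`), the temperature conversion, and the simulated sensor are hypothetical; a real streaming pipeline would read from a message queue or similar source rather than a generator.

```python
from itertools import count, islice

def to_celsius(fahrenheit):
    """Example transformation applied to each record."""
    return round((fahrenheit - 32) * 5 / 9, 1)

def run_batch(records, transform):
    """Batch (ETL style): process a finite set of records, then stop."""
    return [transform(r) for r in records]

def run_stream(source, transform):
    """Streaming style: emit each result as soon as its record arrives."""
    for record in source:
        yield transform(record)

# Batch: the input is complete before processing starts.
batch_result = run_batch([32, 212], to_celsius)

# Stream: the source never ends, so results are consumed incrementally.
sensor = (32 + 18 * n for n in count())            # simulated endless readings
stream_head = list(islice(run_stream(sensor, to_celsius), 3))
```

The batch call returns once and is done, while the stream keeps producing values for as long as the consumer asks for them; `islice` takes only the first three here so the example terminates.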